I know of many companies that talk about making sure they are cloud agnostic. I know of only one or two that have made inroads into successfully doing so. With rare exception, I think it’s a complete waste of time and money.

Let’s start with what it means to be “cloud agnostic”. According to Looker.com: “[c]loud-agnostic platforms are environments that are capable of operating with any public cloud provider with minimal disruptions to a business. Businesses that employ a cloud-agnostic strategy are able to efficiently scale their use of cloud services and take advantage of different features and price structures.”

Total bullshit in practice, in my opinion, but gosh it sounds great. Not being tied to AWS, and not lining Jeff’s pockets more (side fact: the guy makes an Amazon worker’s median salary in approximately ten seconds. What…the…fuck…), would be cool. As I’ve said many times before, Friends Don’t Let Friends Microsoft, but it would be good, on the surface at least, to have the ability to choose between it (Azure), Google (GCP), AWS or IBM (just kidding. Nobody would really choose IBM’s cloud). And as someone who, all things being equal, tends to lean towards open source options (Apache Spark, for example), it seems reasonable. And to an extent, it is.

But here’s the rub: all things being equal, I’ll choose cloud native architectures over open source, because they’re ultimately going to be better supported, better integrated (a big one), and better optimized for the cloud on which they run, and in addition, they allow me to better exploit the pricing model of the cloud (another big one!). Maybe it’s obvious, but it’s my blog, so I’ll pick these apart and talk about each of them.

Better Supported

Emphasizing again, I'm a big fan of open source technologies. I spent a lot of years building out solutions in things like Ab Initio, and it's a helluva solution, but it comes from a software company and it's insanely expensive, and while it's very flexible, ultimately it's a hammer, so everything looks like a nail. And it's insanely expensive. And it's really specialized, so talent is hard to come by, and in turn the decision is often to forgo talent and just fill seats, and even then, those seats are expensive to fill. And if I didn't mention, the software is terribly expensive. And maybe the worst thing is that the software is extended solely by the company. Don't get me wrong: speaking specifically to Ab Initio, they have some incredibly smart humans. In fact, the most intelligent person I've ever met works there and has for decades (that's a call out to you, Becky; you truly inspire me, and I'm forever in awe). But even for them, and certainly for most companies that are run by mere mortals, that means you are largely giving up on the value that comes from the community. There is real power in that community, in opening up your software to forks and to merges back into the master branch. Sure, organizations are always open to suggestions, but the suggestions that actually reach them are far more limited than the workarounds people are implementing in the field. Imagine I'm using application X to do a thing and it lacks the full suite of capabilities I need. I'll have to find something else to fulfill my requirements, and I'll essentially bastardize my pipeline to deliver the functionality. That workaround is unlikely to be communicated back to the software company, and even if it is, it may or may not be understood, it could fall on deaf ears, it may or may not be viewed as a good idea, and it may or may not remain the same solution if and when it does get implemented. And I certainly can't wait for that anyway.

Thus, if the language or application that I'm using to build my solution has the additional capabilities that come from the world at large (the community), and by extension and approachability, has a wider user group using it for more solutions (the software I mentioned before is significantly more prevalent in banks, insurance companies and telco, for example. Perhaps not coincidentally, aren't those the same industries where the mainframe is still found? Just sayin'), then I have more talent from which to choose, and because of the price point (free), it's going to be found more frequently in all industries, including non-profits, startups, and personal projects. In short, it's going to be closer to pure innovation. That talent is certainly more appealing than someone who has worked for years at Citibank. And I can say that because I worked there. Yep, I'll take open source over the alternative, if the alternative is a private software company, all things being equal. Except that I'm supposed to be arguing against open source here, because (I think) the point of this blog was about why I disagree with organizations pursuing cloud agnosticism. I realize I appear to be talking out of both sides of my mouth, but stick with me. My arguments might not get better, my writing certainly won't, but I have more to say.

So, yeah, I would choose open source over a private software offering, but I'm actually going to argue for a cloud service (which is to say, a service offering that is specific to a cloud provider) over open source, all things being equal. In this section, I'm saying it's better supported, because all the major cloud providers are "eating their own dog food": Google runs about a trillion offerings on its own cloud (and make no mistake, that list is only their public stuff; my completely guessing self imagines it represents about 10% of the things they're doing), amazon.com runs on AWS, and all things Microsoft run on Azure (which seems both unfortunate and fitting). And in addition to those well-known products, millions of other companies run on their clouds. They've got massive incentive to make sure their platforms offer elegant, scalable, secure, flexible, integratable, attractive solutions. Thus, the better support ultimately comes from a combination of the community's benefits (the major scalable products running on the cloud; the eating of the dog food) and, closely related, the integrations. So let's explore the integration argument...

Better Integrations

If my reason for choosing a technology that is open source is that I want to remain cloud agnostic, and a same-or-better solution exists as part of a service offering from the cloud, I am making a subpar architectural choice, and I'm hurting the solution for something I don't believe to be worth it. Let's use a practical example to illustrate. Imagine I've chosen to use Apache Cassandra because I need a distributed, scalable key/value store and because I want to stay open source. Cassandra is impressive technology, no doubt. We used it at Netflix for our customer base, and Netflix has a ton of customers. Purely from a technology standpoint, okay, I get it. But I disagree with it.

With this decision, I'm going to stand that Cassandra ring up on clusters in AWS (or insert your cloud provider name here). Not only have I made Cassandra my key/value technology choice, but I've been forced to run EC2 instances, and in turn I've also (as a means to an end) introduced VPCs, and subnets, and internet gateways, and security groups, and a bunch of other shit. I've also signed myself up to deal with things like scalability and multiple availability zones and multiple regions and failover and everything else that largely comes out of the box if I'd chosen to go with something like DynamoDB. And on top of all of that, DynamoDB integrates out of the box with a lot of the other AWS services, so my development time balloons by comparison when I make the open source choice. And that doesn't even start to include needing things like Aegisthus whenever I want to do any sort of batch operation to extract data in bulk (if I wanted to batch ingest into my data environment, for example).

Well, shit. That escalated quickly. All this because I wanted to remain cloud agnostic. And, to be sure, this is just the backend for (e.g.) my mobile application. Every other decision I make for this same reason is going to have the same or similar ramifications, so the complexity, security risks, and costs are going to get exponentially worse with each layer I add to the onion (Captain Obvious says: onions should be peeled, not layered).
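For contrast, here's roughly what the DynamoDB side of that choice amounts to. This is a minimal sketch, assuming a boto3 DynamoDB client gets passed in; the table and key names are hypothetical. There are no instances, VPCs, or rings to manage, and on-demand billing (`PAY_PER_REQUEST`) means paying per request rather than for provisioned capacity.

```python
def create_key_value_table(dynamodb_client, table_name, key_name="pk"):
    """Create an on-demand DynamoDB table with a single string partition key.

    `dynamodb_client` is assumed to be a boto3 client, e.g. boto3.client("dynamodb").
    `table_name` and `key_name` are illustrative; pick your own.
    """
    response = dynamodb_client.create_table(
        TableName=table_name,
        # Only key attributes are declared up front; items are otherwise schemaless.
        AttributeDefinitions=[{"AttributeName": key_name, "AttributeType": "S"}],
        KeySchema=[{"AttributeName": key_name, "KeyType": "HASH"}],
        # On-demand capacity: billed per read/write request, nothing to provision.
        BillingMode="PAY_PER_REQUEST",
    )
    return response["TableDescription"]["TableArn"]
```

Calling it is then something like `create_key_value_table(boto3.client("dynamodb"), "user-profiles")`, given credentials and a default region. Compare that with the list of EC2, VPC, and security group decisions above.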

One of the incredible opportunities the cloud affords us is in prototyping and innovation. Long gone (thankfully) are the days of writing proposals to get sign-off from the Architecture Lords so that my proposal could land on the desk of someone with the power of the pen to sign the check. Assuming the Architecture Lords allowed me the opportunity to be heard, and assuming the Check Signing Lord not only agreed but had some extra budget he or she would toss my way, and assuming the Project Manager Lord and Team Manager Lord had some resources to work on it, I might get the chance to try something. To be clear: all these Lords signing off on something meant two things had happened: (1) the idea had a massive chance of being successful and moving the proverbial needle, AND (2) I hadn't upset any of the Lords. And let's be honest here, the second one is a non-starter. I've inevitably pissed them off. All of them. Multiple times each. Probably multiple times since lunch. I contend they had it coming, and they should appreciate my honesty and frankness, but if they do hold silent grudges, I'm screwed. Anyway...

In the cloud, I can do all of this for free, or really, really close to it. Imagine that I wanted to try using a graph database for something. In the old days, with the Dickhead Lords, after all my begging, pleading, crying, yelling, and back-office shenanigans, I finally got my server and installed Neo4j and, fingers crossed, it worked (in actuality, it had to, because the Dickhead Lords wouldn't give me another opportunity if it didn't), but it took weeks/months/quarters, and if it didn't work, that server was going to be tough to justify. In the cloud, I just did a prototype on Neptune. It took an afternoon. And the costs are negligible. And they end when I terminate the service.

And that, if I could pick just one thing out of all the capabilities the cloud offers, is the biggest differentiator from a pure innovation standpoint. Suddenly having essentially every technical offering available creates innovation capabilities like nothing else. Innovation is no longer missed because the risk of introducing new technologies and procuring infrastructure makes it a non-starter, and this capability is available to organizations of all sizes (and equally, to individuals: I do things like this all the time in my own AWS account). The free tiers are incredible!

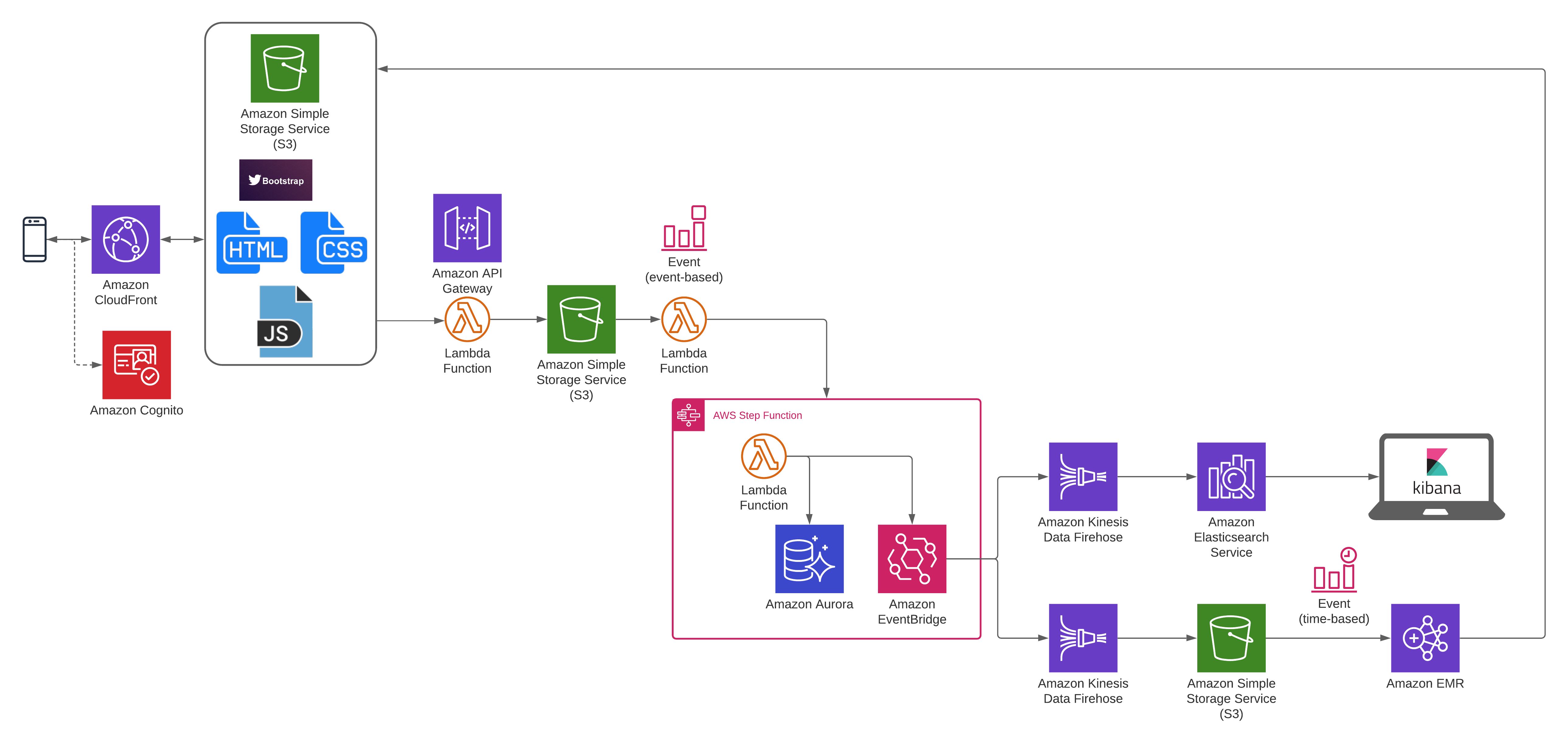

I think I strayed there. Oops. What I was really talking about was integrations. And that's huge, even if I've not really talked about it yet. Take, for instance, AWS (full disclosure, I've been working in AWS for more than a decade now, and I'll focus almost solely on that provider here in all my examples). Imagine I'm building out a solution that needs to react whenever, say, an image gets uploaded to my website.

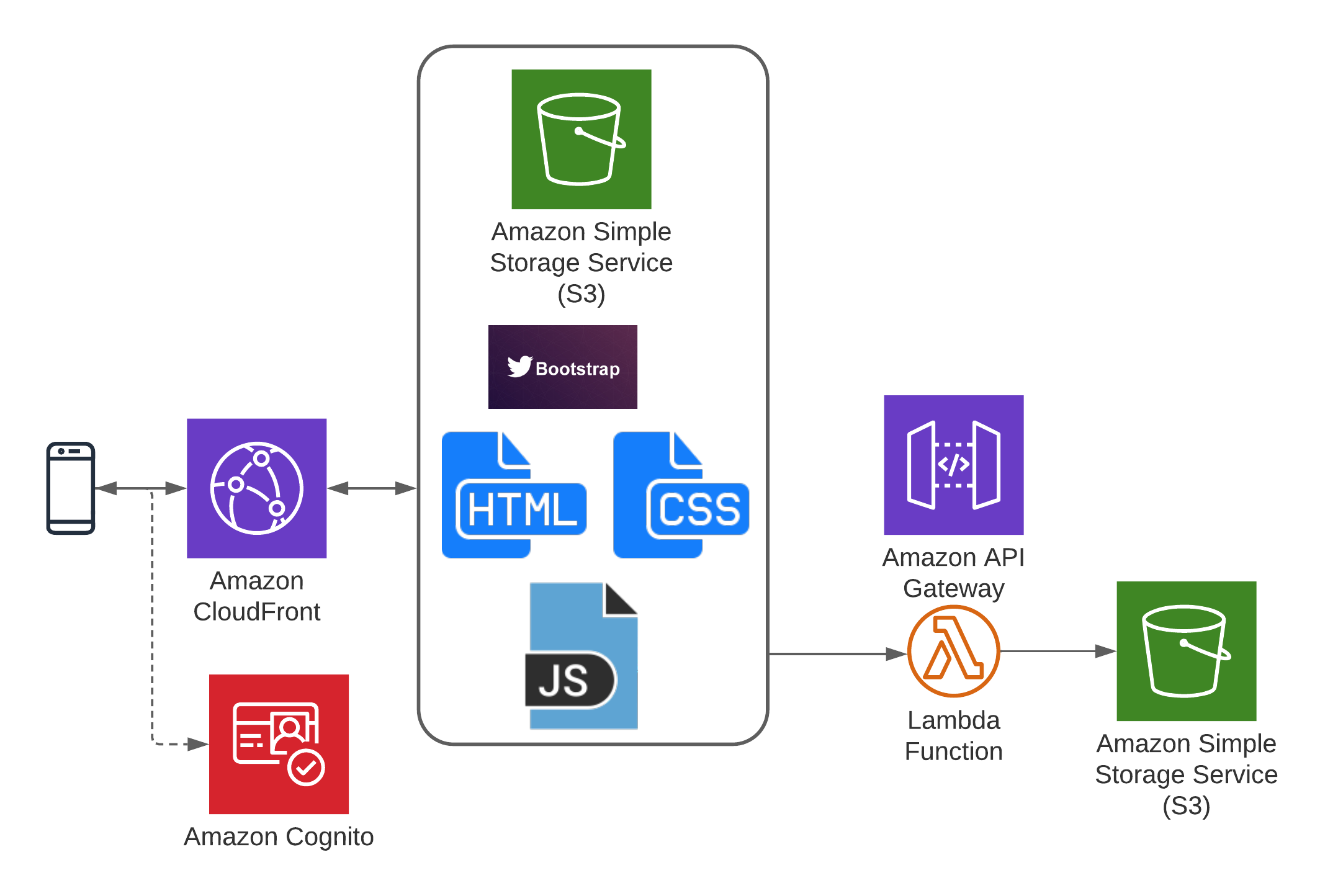

Let's quickly talk about how we might build this out. Perhaps my website is static, and I've used an (open source) Bootstrap theme, and I've put it on S3. Perhaps I used CloudFront for my CDN, because, you know, my website definitely needs to be global because it's so cool. I also need to integrate some OAuth. Might as well use Cognito because, well, as another AWS service, it's easily integrated. My website is the usual suspects: HTML, CSS and JavaScript. The latter makes RESTful API calls to API Gateway, which, behind the scenes, invokes Lambda functions (AWS, easily integrated) to perform the image uploads.
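The "react whenever an image gets uploaded" part is where the integrations really shine: S3 can send an event notification that invokes a Lambda function directly whenever an object is created. Here's a minimal sketch of such a handler, assuming the bucket's notification is configured for `ObjectCreated` events; the image-extension filter and the returned shape are my own illustrative choices, not anything AWS prescribes.

```python
import urllib.parse

# Extensions treated as images; an illustrative assumption, not an AWS setting.
IMAGE_SUFFIXES = (".jpg", ".jpeg", ".png", ".gif")


def handler(event, context):
    """Lambda entry point for S3 ObjectCreated event notifications.

    Each record carries the bucket name and the (URL-encoded) object key.
    """
    uploads = []
    for record in event.get("Records", []):
        # eventName looks like "ObjectCreated:Put"; ignore anything else.
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue
        bucket = record["s3"]["bucket"]["name"]
        # S3 URL-encodes keys in notifications (e.g. spaces arrive as '+').
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        if key.lower().endswith(IMAGE_SUFFIXES):
            # Real work (thumbnails, moderation, indexing...) would go here.
            uploads.append({"bucket": bucket, "key": key})
    return {"processed": uploads}
```

No polling, no queue I had to stand up, no server waiting around: the upload itself is the trigger, and I only pay while the function runs.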

And with this set of capabilities at my fingertips, I get to highlight a specific finger to the Dickhead Lords.

So anyway, yeah, integrations are pretty freakin' important to me, and they should be for you, too! And what that architecture ends up being is cloud native. And I've talked about how much that matters to me previously, and it should be important to you, too! So there.

Better Optimized

This one is short, because it's largely been covered already, both in the dog food discussion and the integration discussion. Basically, these service offerings are designed to run on their native cloud, so of course they're optimized for it. There are some exceptions, like Glue, which I think is absolutely brutal. They took such a powerful offering in Apache Spark and dumbed it down so much that what's left is a shell of the whole. But that pales in comparison to the super-turd that is QuickShite. I swear, it's like AWS felt it was helping its customers too much, so it introduced this "offering". I've used this abortion of an application three times over roughly the past five years, and it never really improves, and it always leaves me wishing AWS would make a real investment in data visualization, or feeling like I should stand up my own Tableau server and use a grown-up application, or just eat the additional complexity and do it all in D3.js.

Anywho, since all of these applications are so well integrated, the providers are obviously going to invest in ensuring those integrations are optimized. Similarly, defining a bootstrap action when launching an EMR cluster, or adding a job (a "step", in EMR terms) to a running one, is optimized, because EMR is AWS's Hadoop offering, and because AWS realizes that folks need to do shit like add jobs to running clusters. I've quickly become a huge fan of GraphQL. Have you seen the integrations and optimizations with AppSync? It makes me feel like it'll take a lot to convince me to ever go down the API Gateway route again, unless I have to. Truly impressive. And the list goes on and on. I can't easily (if at all) do these types of optimizations on the EC2 instances that house my Cassandra ring, or the ones where I put MongoDB or Solr. Again, those are all fine open source offerings, but the optimizations can't exist in the same way, because the investment in optimizing them isn't the same.
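To make the EMR point concrete: adding a Spark job to a running cluster is a single API call, `add_job_flow_steps`, with `command-runner.jar` wrapping `spark-submit`. The sketch below assumes a boto3 EMR client gets passed in; the step name, script location, and cluster ID are hypothetical placeholders.

```python
def spark_step(name, script_uri, *script_args, action_on_failure="CONTINUE"):
    """Build one EMR step definition that runs a Spark script via spark-submit."""
    return {
        "Name": name,
        # What EMR does if the step fails: keep the cluster running here.
        "ActionOnFailure": action_on_failure,
        "HadoopJarStep": {
            # command-runner.jar lets a step run commands like spark-submit.
            "Jar": "command-runner.jar",
            "Args": ["spark-submit", "--deploy-mode", "cluster", script_uri, *script_args],
        },
    }


def add_spark_step(emr_client, cluster_id, step):
    """Submit the step to an already-running cluster; returns the new step ID.

    `emr_client` is assumed to be boto3.client("emr").
    """
    response = emr_client.add_job_flow_steps(JobFlowId=cluster_id, Steps=[step])
    return response["StepIds"][0]
```

Usage would look like `add_spark_step(boto3.client("emr"), "j-EXAMPLE", spark_step("nightly-aggregation", "s3://my-bucket/jobs/aggregate.py"))`. Doing the equivalent against a hand-rolled Hadoop cluster on EC2 is a project, not a function call.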

All right, horse beaten.

Exploiting the Pricing Model of the Cloud

With the cloud, exploiting the pricing model can often be measured simply by the ability to scale bi-directionally, or be "elastic", when this capability is paired with decoupled disk and compute. Being elastic is impossible when it comes to disk, since information on disk is, well, on disk. If I have a terabyte of data on S3, assuming nothing else changes, tomorrow I have a terabyte of data on disk. And the next day, too. Luckily disk is practically free, so I can live with this inevitability, but I don't have to live with it for compute, and (usually) neither do the optimized services within the cloud provider. With Lambda, for instance, I pay for what I use: I'm not paying anything for Lambda functions if they're not being invoked. I'm not paying for reads or writes in DynamoDB (in on-demand mode) if there aren't reads or writes happening. I'm not paying for notifications on SNS if notifications aren't being sent or received. I can scale to zero and scale to millions (again, bi-directional scalability, or elasticity).
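A back-of-the-envelope sketch of why bi-directional scaling shows up on the bill. The rates below are made-up placeholders (check your provider's current pricing page); the shape of the math is the point: Lambda-style cost is per GB-second plus per request, so it tracks invocations and drops to zero with them, while an always-on server costs the same whether or not anyone uses it.

```python
# Hypothetical rates for illustration only; NOT current AWS prices.
PRICE_PER_GB_SECOND = 0.00002
PRICE_PER_MILLION_REQUESTS = 0.20
ALWAYS_ON_HOURLY_RATE = 0.10


def pay_per_use_cost(invocations, avg_duration_s, memory_gb):
    """Lambda-style billing: compute (GB-seconds) plus a per-request charge."""
    gb_seconds = invocations * avg_duration_s * memory_gb
    compute = gb_seconds * PRICE_PER_GB_SECOND
    requests = invocations / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    return compute + requests


def always_on_cost(hours, instances=1):
    """Server-style billing: you pay for every hour the capacity exists."""
    return hours * instances * ALWAYS_ON_HOURLY_RATE
```

At these invented rates, a 730-hour month costs $73 for the always-on server even with zero traffic, while the pay-per-use function costs $0 with zero invocations and about $2.20 for a million 200 ms invocations at 0.5 GB. And scaling the server side out only ever multiplies that fixed number.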

So the best I can do with the cloud is to pay for what I use, and only what I use, and to limit my usage to compute that exists only when it is demanded (I've ranted about RDBMS before). I don't want servers plugged in (meaning: I don't want to be paying for them) unless I have to use their compute (and I only want to pay for the compute I'm using, not the computational capacity that is available). And I am going to optimize my disk usage (even though it's practically free) by taking advantage of things like Glacier, where possible.
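Taking advantage of Glacier is mostly configuration: an S3 lifecycle rule that transitions objects under a prefix to the `GLACIER` storage class after some number of days. A minimal sketch, assuming boto3 for the actual call; the prefix and the 90-day threshold are hypothetical choices.

```python
def glacier_lifecycle_config(prefix, days_to_glacier=90):
    """Build an S3 lifecycle configuration that archives objects under `prefix`."""
    return {
        "Rules": [
            {
                "ID": f"archive-{prefix.strip('/') or 'all'}",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                # Move objects to Glacier once they are this many days old.
                "Transitions": [{"Days": days_to_glacier, "StorageClass": "GLACIER"}],
            }
        ]
    }


def apply_lifecycle(s3_client, bucket, config):
    """Apply the config; `s3_client` is assumed to be boto3.client("s3").

    Note: this call replaces any lifecycle rules already set on the bucket.
    """
    s3_client.put_bucket_lifecycle_configuration(Bucket=bucket, LifecycleConfiguration=config)
```

Set it once and the cheaper storage tier just happens; no cron job, no server to babysit it.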

And I simply cannot do any of this in a fully cloud agnostic architecture. My disk is tied to something I'm paying for. Probably my Linux server or EC2 instance. And then I've tightly coupled my compute and storage. I see where this is going: to me getting all ornery.

Okay, so now I've got to have some servers to give me disk, and I figure I might as well use the disk attached to the servers I need to house my open source technologies anyway. Now I'm uni-directionally scalable (realistically, I can only ever scale out, and it's going to be expensive), and it's not going to integrate easily or out of the box with much else, at least not without some more engineering heroics, but at least it won't be optimized. Thank goodness it's going to be more expensive. But gosh, it's worth it, in case I want to use Azure because my life was otherwise happy enough.

But There Are Advantages to Being Cloud Agnostic

There are. I'm not discounting that. It's a fact. Not being tied to a cloud provider means that if one provider has an offering the other doesn't (or one provider's price savings make it advantageous), I can have the best of both worlds. That's huge. And if I sit here and piss and moan about purity and the benefits of cloud-native solutions and optimizations and the like, and pretend that being able to use the right tool (or application) for the job isn't huge, then I am completely full of shit. Full transparency, I am full of shit. Just not completely.

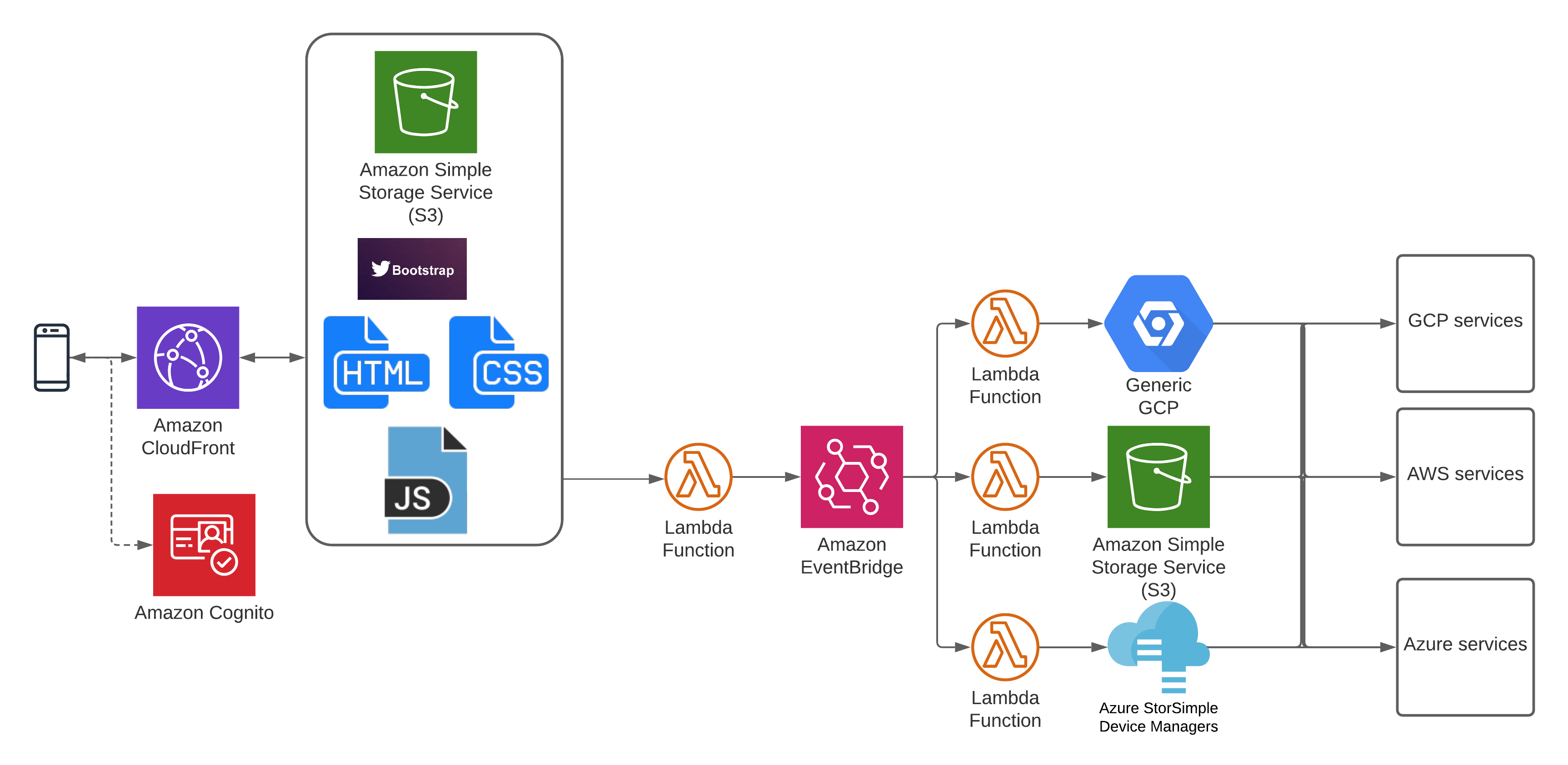

So what is the easiest and best way to be cloud agnostic without going down a path in which I'm not taking advantage of the full suite of offerings that a given cloud provider affords? First, we can likely agree that every cloud provider essentially offers the same general set of services. This is important because it means that despite my anti-Azureness, if I were forced to use it, I'd still be able to build the same architectural solutions.

I already made the argument for decoupling compute and disk. Looking partly through that lens, then, what I tend to prefer, if I need to check the box of being cloud agnostic, is to decouple my disk and compute, put the disk at the center, and tie individual functionalities to individual cloud providers around it.

By taking this approach, I'm essentially leveraging cloud providers for specific functionalities, thereby taking advantage of the services each cloud offers. For instance, a lot of my Data Science friends prefer the data science offerings of GCP. Great. If those tools/applications can read data from S3, then we're golden (or, similarly, if AWS services can read data from Google Cloud Storage).
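A toy sketch of "disk at the center", in which two pieces of compute share nothing but the data's location and format. Everything here is hypothetical: `InMemoryStore` stands in for an S3 bucket, and the two functions stand in for, say, an AWS ingestion pipeline and a GCP data science tool. In practice each would be a native service in its own cloud reading the same objects, not Python functions sharing a process.

```python
import json
from typing import Protocol


class ObjectStore(Protocol):
    """The one thing every functionality agrees on: where the data lives."""

    def get(self, key: str) -> bytes: ...

    def put(self, key: str, data: bytes) -> None: ...


class InMemoryStore:
    """Stand-in for an S3 bucket in this sketch."""

    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}

    def get(self, key: str) -> bytes:
        return self._objects[key]

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data


def ingest_orders(store: ObjectStore, orders: list[dict]) -> str:
    """'AWS-side' functionality: lands raw data as JSON Lines in the shared store."""
    key = "raw/orders.jsonl"
    store.put(key, "\n".join(json.dumps(order) for order in orders).encode())
    return key


def average_order_value(store: ObjectStore, key: str) -> float:
    """'GCP-side' functionality: reads the same object, knows nothing about ingestion."""
    lines = store.get(key).decode().splitlines()
    totals = [json.loads(line)["total"] for line in lines]
    return sum(totals) / len(totals)
```

The design choice is that neither function knows about the other; the only contract is the object key and its format (JSON Lines here), which is what would let you swap the consuming side to a different provider later without touching ingestion.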

The important nuance here is that I'm not building the same capabilities and functionalities in every cloud; rather, I'm using each to my advantage on a functionality-by-functionality basis, and recognizing further that, if I ever decide to move away from one cloud provider completely, I can replace its functionality with an equivalent in a different cloud. Make no mistake, though: in that scenario, I'm rebuilding that part of my solution on a different set of services (specific to the other cloud provider).

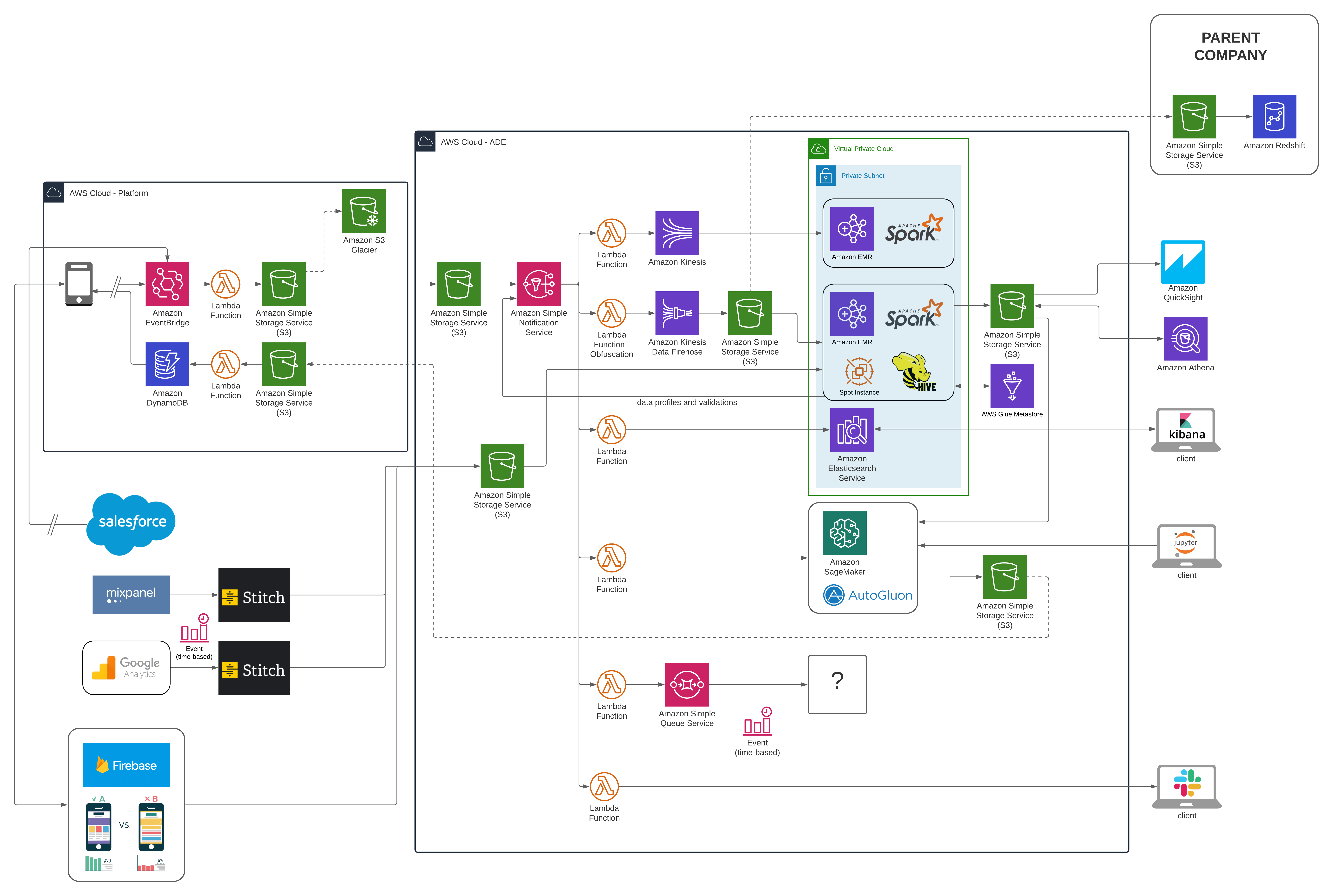

The image below illustrates, at an admittedly very high level, how this architectural approach might look (and this has to be my worst diagram ever, and that's saying something. If you correctly thought I was a horrible writer, you should see my drawings. Leave me alone):

Conclusion

All right, I have to sum up a few thousand words into some sort of tl;dr, and I guess this is it:

If you tell me that you want to be cloud agnostic because you want to use the right application for the job, and there are certain capabilities in one cloud that are so much better than all the rest, okay, I can dig that. And I'll tell you that the right way to do that is to (a) choose a base cloud provider for almost everything, because the integrations and optimizations are incredibly valuable; (b) stick with cloud services wherever you can, because they're usually really well supported, and your application can benefit over time from the enhancements the provider is going to add to them; and (c) make sure you're decoupling your disk and compute, so you can tie the alternative cloud offerings to the disk and take advantage of the best tool for the job. And if worst comes to worst, accept that you're going to rewrite the parts of your application that depended on the base cloud provider. But I've never actually seen that happen. Ever.

Joel